Cloud Environments for Animal Pose Estimation

Software Engineer Internship @ Allen Institute

Overview

Currently, scientists download data from the cloud onto their local workstations to perform pose estimation analyses. This approach is hard to reproduce and is limited by factors such as insufficient local storage and the lack of GPU capabilities. The AWS AppStream 2.0 service provides an alternative: scientists perform pose estimation analyses in the cloud, using standardized environments with access to large amounts of cloud data.

The transition to this new pipeline requires little effort from users. Once experimental data is stored in S3 buckets, users can log into their AppStream account and begin analysis right away. The pose estimation software is pre-installed and common data buckets are already mounted, so users have no setup to do. When the analysis is complete, results can be saved to each user’s own S3 home bucket. These home buckets can be integrated into existing analysis pipelines, such as Code Ocean, for further processing.

One advantage of this new pipeline is that all GPU-intensive tasks run within the AppStream instance, which makes downstream applications more cost-effective. The software’s user interface within AppStream also mirrors the experience one would have on a local computer, which eases the transition. Users do not need an AWS account to use the service; registration by the admin is all that’s needed. Each registered user receives their own AppStream account, linked to their email address.

To provide the AppStream service to users, developers first construct images that encapsulate the required software. This is done by installing the software within an image builder instance, much like a normal software installation. Streaming instances are then created from the image and distributed to users.
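The step from a finished image to streaming instances can be sketched with the AWS SDK for Python. This is a minimal sketch, not the project's actual provisioning code: the function name `provision_streaming`, the resource names, and the default instance type are hypothetical, and the `client` argument is assumed to be a boto3 AppStream client (`boto3.client("appstream")`).

```python
def provision_streaming(client, image_name, fleet_name, stack_name,
                        instance_type="stream.graphics.g4dn.xlarge",
                        desired_instances=1):
    """Create a fleet from an existing image and expose it through a stack.

    `client` is assumed to be a boto3 AppStream client. All resource names
    and the default instance type are placeholders for illustration.
    """
    # A fleet is the pool of streaming instances launched from the image.
    client.create_fleet(
        Name=fleet_name,
        ImageName=image_name,
        InstanceType=instance_type,
        ComputeCapacity={"DesiredInstances": desired_instances},
    )
    # A stack is what users are granted access to; it is linked to the fleet.
    client.create_stack(Name=stack_name)
    client.associate_fleet(FleetName=fleet_name, StackName=stack_name)
    # Start the fleet so that instances become available for streaming.
    client.start_fleet(Name=fleet_name)


# Example call (requires boto3 and AWS credentials; names are hypothetical):
#   import boto3
#   provision_streaming(boto3.client("appstream"),
#                       "pose-estimation-image", "pose-fleet", "pose-stack")
```

Passing the client in as an argument keeps the sketch free of credentials and makes it easy to substitute a stub when testing.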

AppStream significantly reduces the developer’s maintenance workload. Rather than overseeing numerous virtual machine instances individually, developers only need to maintain and update the images. This leaves developers more time to improve the user experience and expand the range of software offered, instead of managing virtual machines.

Proof of Concept

As a demonstration of the AppStream pipeline, I trained pose estimation models using both SLEAP and DeepLabCut (DLC) on AppStream. The results were then compared to each other.

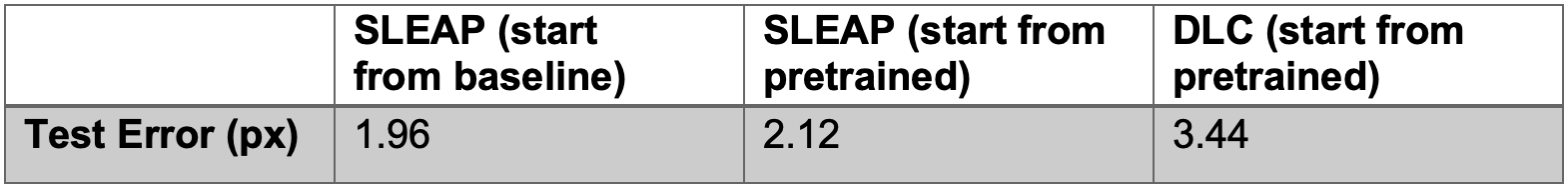

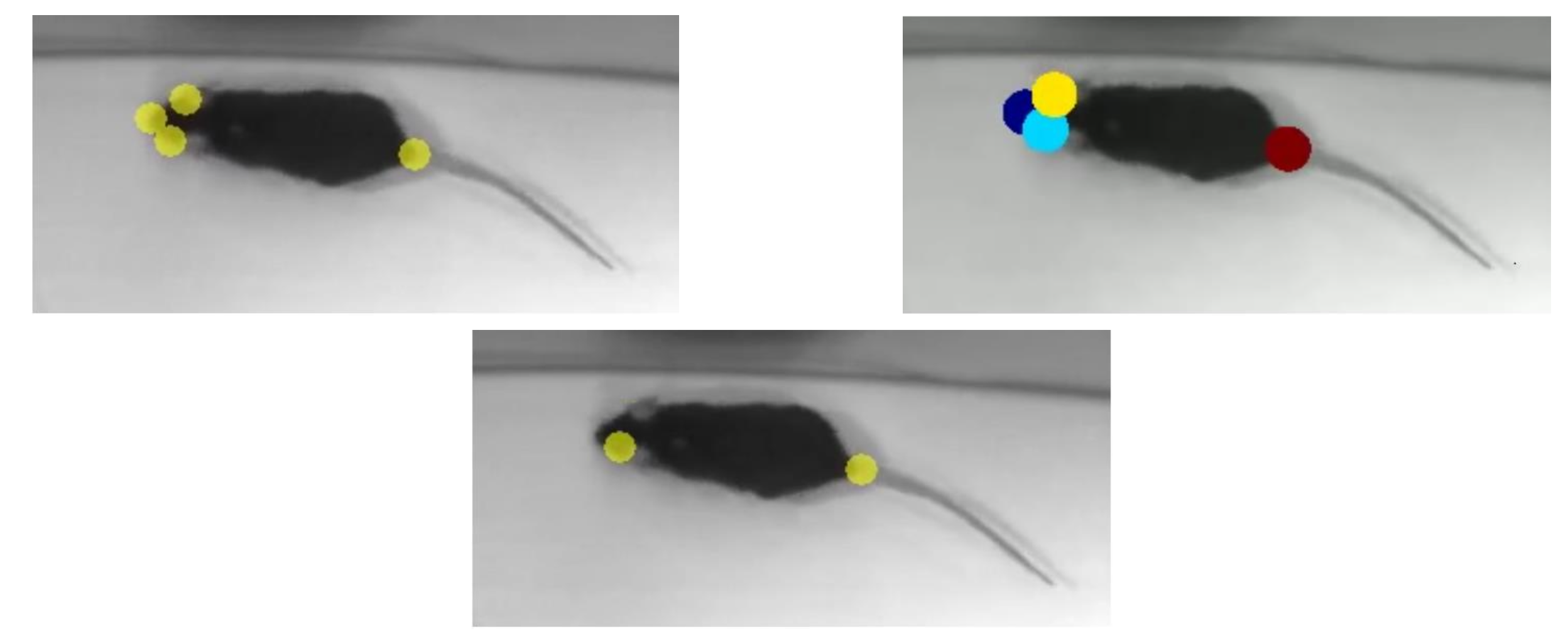

With DLC, I trained a deep residual network with 50 layers (ResNet-50), initialized from pre-trained weights. With SLEAP, I trained a U-Net backbone, both with and without pre-trained encoder weights for initialization. The video and labeled frames were imported from DLC open field data 1, and an 80-20 train-test split was performed. Test pixel error is computed as the average difference, in pixels, between the predicted labels and the ground truth labels of the test frames.
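The split and the error metric described above can be sketched as follows. This is an illustration under stated assumptions, not the code used by DLC or SLEAP: it assumes "difference" means the Euclidean distance between predicted and ground truth coordinates, that labels are stored as arrays of shape (frames, body parts, 2), and that a missing prediction is encoded as NaN. The function names are hypothetical.

```python
import numpy as np


def train_test_split_indices(n_frames, test_fraction=0.2, seed=0):
    """Shuffle frame indices and return (train_indices, test_indices)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_frames)
    n_test = int(round(n_frames * test_fraction))
    return order[n_test:], order[:n_test]


def mean_pixel_error(predicted, ground_truth):
    """Mean Euclidean distance in pixels over all frames and body parts.

    Both inputs have shape (frames, body_parts, 2). Body parts with NaN
    coordinates are skipped rather than penalized (an assumption).
    """
    predicted = np.asarray(predicted, dtype=float)
    ground_truth = np.asarray(ground_truth, dtype=float)
    distances = np.linalg.norm(predicted - ground_truth, axis=-1)
    return float(np.nanmean(distances))


# One test frame with two body parts: errors of 5 px and 0 px.
predicted = np.array([[[3.0, 4.0], [10.0, 10.0]]])
ground_truth = np.array([[[0.0, 0.0], [10.0, 10.0]]])
print(mean_pixel_error(predicted, ground_truth))  # 2.5
```

Under this definition, a body part that a model fails to predict is dropped from the average instead of counting against it, so the pixel error may not reflect omitted body parts.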

The test pixel errors suggest that the SLEAP model trained from baseline weights excelled and that the DLC model underperformed. The visual evidence contradicts this: the screenshots show both of these models generating reasonable predictions when applied to the entire video. In contrast, the SLEAP model trained from pre-trained encoder weights performed poorly, as shown by the omission of two body parts in its screenshot and by frequent mislabeling of distant body parts in other frames not shown here.

Resources

User and developer guides are available at the Cloud Pose Estimation GitHub.